DeepGate2

Improved with Functionality-Aware Learning

About

- PDF Link: here

- Authors: Zhengyuan Shi, Qiang Xu, et al.

- Lab: CURE Lab of CUHK

Intriguing Arguments

- “For example, a NOT gate and its fan-in gate can both have a logic-1 probability of 0.5, but their truth tables are entirely opposite, thus highlighting the inadequacy of this supervision method.”

-

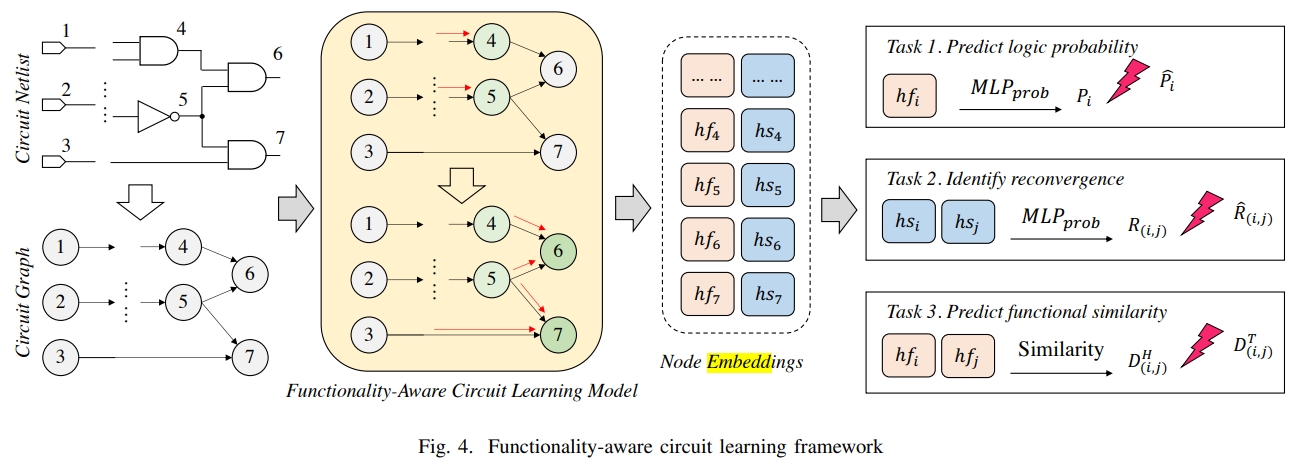

“The circuit representation learning model aims to map both circuit structure and functionality into embedding space, where the structure represents the connecting relationship of logic gates and the functionality means the logic computational mapping from inputs to outputs.”
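The NOT-gate argument quoted above can be checked by brute force. The following is a minimal sketch, assuming a hypothetical two-input circuit whose fan-in gate is an XOR; the helper names `truth_table` and `logic1_probability` are illustrative and do not come from the paper.

```python
from itertools import product


def truth_table(fn, num_inputs):
    """Evaluate fn on every input assignment and return the outputs as a tuple."""
    return tuple(fn(*bits) for bits in product((0, 1), repeat=num_inputs))


def logic1_probability(table):
    """Fraction of input assignments for which the gate outputs 1."""
    return sum(table) / len(table)


# Hypothetical fan-in gate: XOR of two primary inputs.
def fanin(a, b):
    return a ^ b


# NOT gate driven by that fan-in gate.
def not_gate(a, b):
    return 1 - fanin(a, b)


tt_fanin = truth_table(fanin, 2)
tt_not = truth_table(not_gate, 2)

p_fanin = logic1_probability(tt_fanin)
p_not = logic1_probability(tt_not)

# Number of input assignments on which the two gates disagree.
hamming = sum(x != y for x, y in zip(tt_fanin, tt_not))

print(p_fanin, p_not, hamming)
```

Both gates have the same logic-1 probability, yet they disagree on every input assignment, so a probability label alone cannot tell them apart.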

Innovative Designs

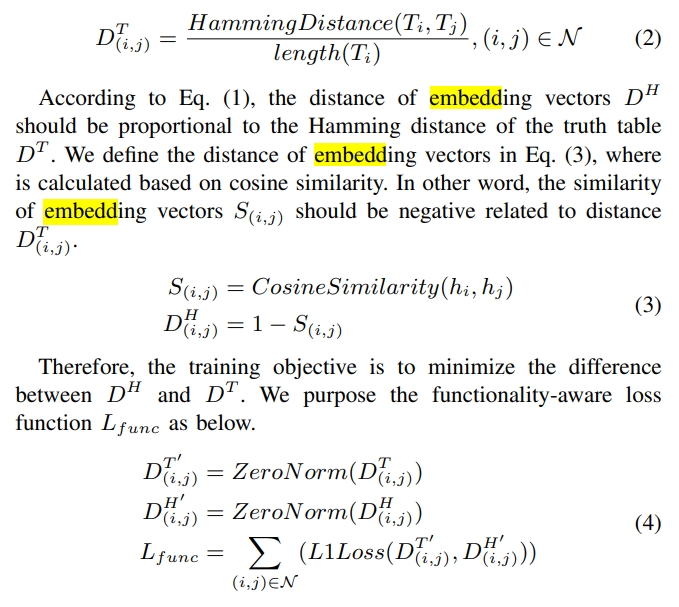

- Replace logic probability with truth table as the new supervision.

- Compose the embedding of each primary input (PI) from a structural embedding (mutually orthogonal across PIs) and a functional embedding (identical for all PIs, since every PI has the same logic probability).

- Split training into two stages: an easy stage (logic probability prediction + reconvergence equivalence) and a hard stage that adds functionality-aware learning, i.e. supervision on the distance between truth tables.
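The PI embedding design in the list above can be illustrated with a small sketch. It assumes one-hot vectors as the simplest choice of mutually orthogonal structural embeddings and a constant vector as the shared functional embedding; the function name `init_pi_embeddings`, the `shared_value` parameter, and these concrete choices are assumptions for illustration, and the paper's actual construction may differ.

```python
def init_pi_embeddings(num_pis, dim, shared_value=0.5):
    """Return (structural, functional) embedding lists for the primary inputs.

    structural[i] is a one-hot vector, so any two distinct PIs are orthogonal.
    functional[i] is the same constant vector for every PI.
    """
    if num_pis > dim:
        raise ValueError("one-hot vectors need dim >= num_pis to stay orthogonal")
    structural = [
        [1.0 if j == i else 0.0 for j in range(dim)] for i in range(num_pis)
    ]
    functional = [[shared_value] * dim for _ in range(num_pis)]
    return structural, functional


def dot(u, v):
    """Plain dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


structural, functional = init_pi_embeddings(num_pis=3, dim=4)

# Distinct PIs have zero dot product between their structural embeddings.
pairwise_dots = [
    dot(structural[i], structural[j]) for i in range(3) for j in range(i + 1, 3)
]
```

Keeping the structural part distinct per PI lets the model tell inputs apart, while the shared functional part reflects that no PI is functionally distinguishable from another before propagation.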

Diagram

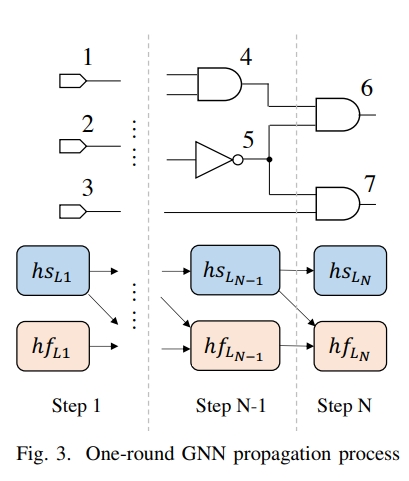

DeepGate2 training process.

Left: One-round propagation process; Right: Functionality-aware loss.
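The functionality-aware loss can be sketched as follows. This is a simplified illustration under stated assumptions, not the paper's exact formulation: truth-table distance is taken as normalized Hamming distance, embedding distance as one minus cosine similarity, and the loss as the mean absolute gap between the two over sampled gate pairs. All function names are hypothetical, and any normalization used in the paper is omitted.

```python
import math


def truth_table_distance(t1, t2):
    """Normalized Hamming distance between two equal-length truth tables."""
    if len(t1) != len(t2):
        raise ValueError("truth tables must have the same length")
    return sum(a != b for a, b in zip(t1, t2)) / len(t1)


def embedding_distance(u, v):
    """Assumed embedding distance: 1 - cosine similarity (non-zero vectors)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return 1.0 - dot / norm


def functionality_aware_loss(pairs):
    """Mean absolute gap between embedding distance and truth-table distance.

    pairs: list of (emb_i, emb_j, tt_i, tt_j) tuples for sampled gate pairs.
    """
    gaps = [
        abs(embedding_distance(ei, ej) - truth_table_distance(ti, tj))
        for ei, ej, ti, tj in pairs
    ]
    return sum(gaps) / len(gaps)


# Truth tables of 2-input AND and OR over inputs 00, 01, 10, 11.
tt_and = (0, 0, 0, 1)
tt_or = (0, 1, 1, 1)

# Hypothetical embeddings that are orthogonal, i.e. embedding distance 1.
loss = functionality_aware_loss([([1.0, 0.0], [0.0, 1.0], tt_and, tt_or)])
```

In this toy pair the truth tables differ on half of the input assignments while the embeddings are maximally apart under the assumed metric, so the loss is non-zero and would push the two embeddings closer together.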

Reference

@inproceedings{shi2023deepgate2,
  title={Deepgate2: Functionality-aware circuit representation learning},
  author={Shi, Zhengyuan and Pan, Hongyang and Khan, Sadaf and Li, Min and Liu, Yi and Huang, Junhua and Zhen, Hui-Ling and Yuan, Mingxuan and Chu, Zhufei and Xu, Qiang},
  booktitle={2023 IEEE/ACM International Conference on Computer Aided Design (ICCAD)},
  pages={1--9},
  year={2023},
  organization={IEEE}
}